Accurate localization is essential for any mobile robot, and achieving it means reliably combining data from multiple sensors.

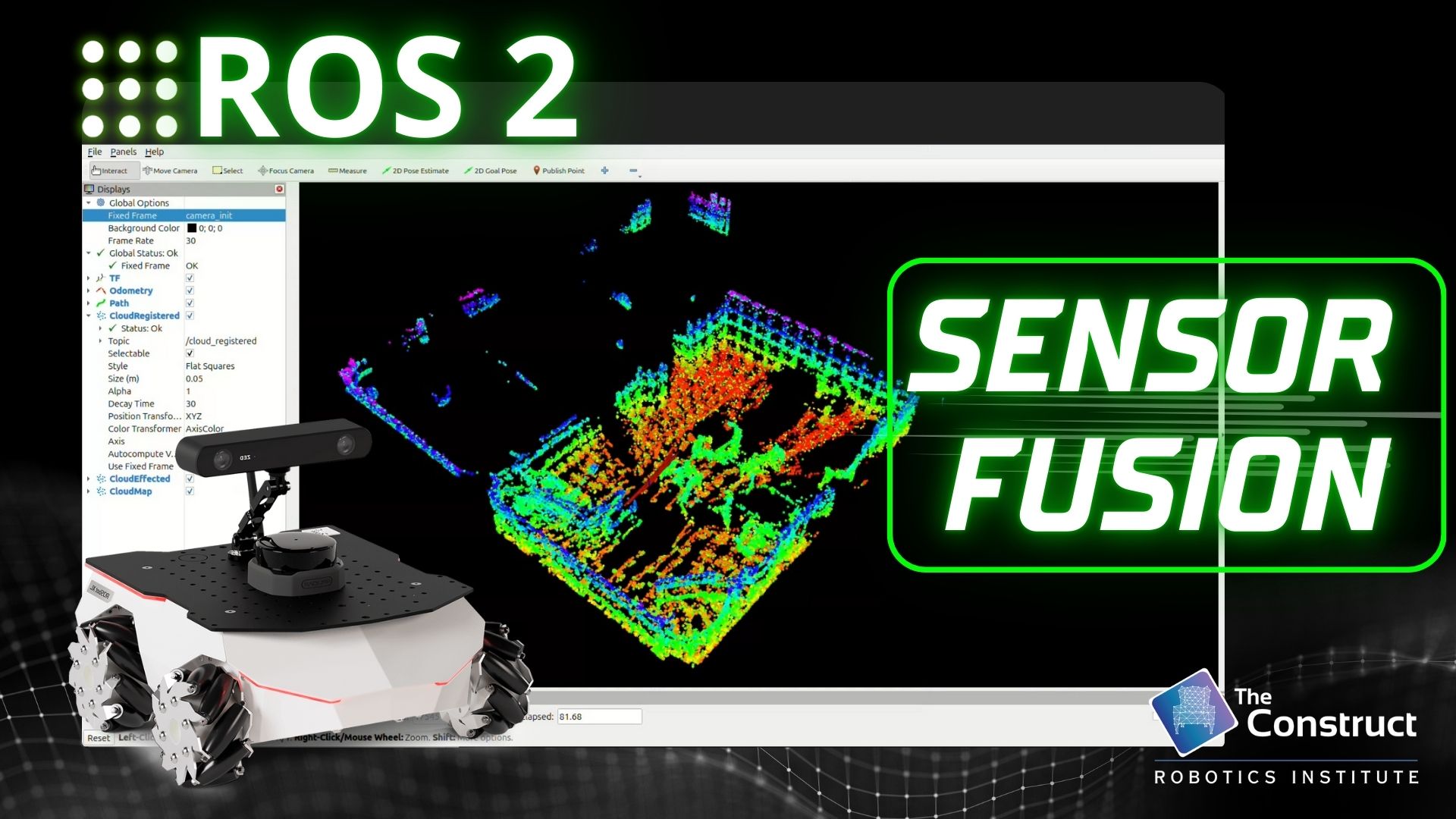

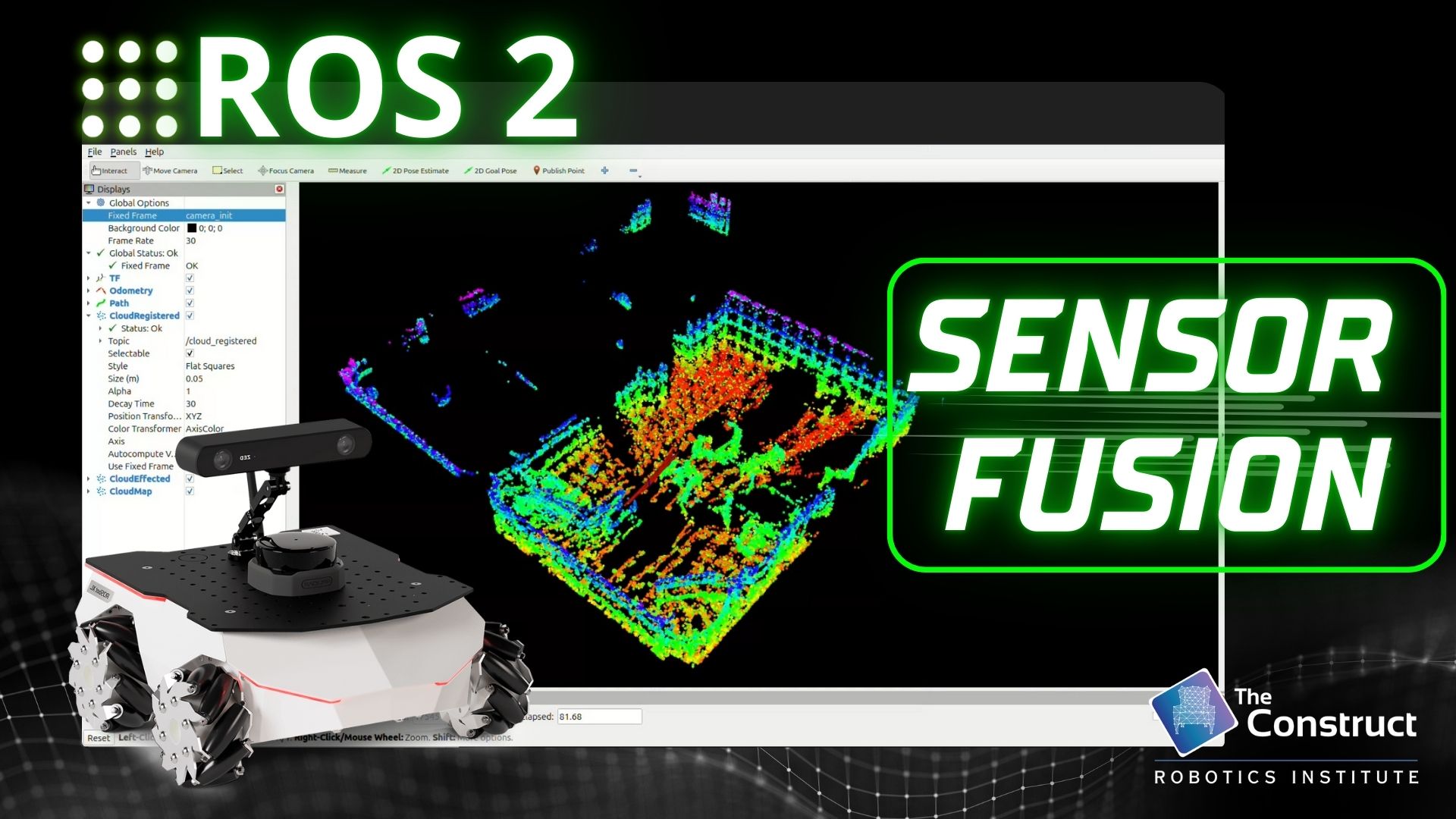

In this open class, you’ll learn how to perform sensor fusion in ROS 2 using the widely adopted robot_localization package.

We’ll break down how robot_localization works, how to configure it for different sensor setups, and how to integrate it into a full ROS 2 navigation pipeline. Throughout the session, we’ll work with the ROSbot XL robot and walk through practical examples of fusing IMU data, wheel odometry, and other available sensors to produce a stable, continuous state estimate.
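To give a feel for what configuring robot_localization involves, here is a minimal sketch of the parameters an EKF node might receive when fusing wheel odometry and an IMU. Each sensor gets a 15-element boolean vector that selects which state variables to fuse, in the order x, y, z, roll, pitch, yaw, their velocities, and the linear accelerations. The topic names (`/odometry/wheels`, `/imu/data`), the helper names `fusion_mask` and `build_ekf_params`, and the particular choice of fused variables are assumptions for illustration, not the settings used in the class.

```python
import json

# Order of the 15 state variables in robot_localization's *_config vectors.
STATE_VARS = [
    "x", "y", "z",
    "roll", "pitch", "yaw",
    "vx", "vy", "vz",
    "vroll", "vpitch", "vyaw",
    "ax", "ay", "az",
]


def fusion_mask(*fused):
    """Return the 15-element boolean vector with the named variables enabled."""
    unknown = set(fused) - set(STATE_VARS)
    if unknown:
        raise ValueError(f"unknown state variables: {sorted(unknown)}")
    return [name in fused for name in STATE_VARS]


def build_ekf_params():
    """Build an illustrative parameter set for an EKF fusing odometry and IMU."""
    return {
        "frequency": 30.0,           # filter update rate in Hz (assumed value)
        "two_d_mode": True,          # planar robot: ignore z, roll, pitch
        "publish_tf": True,
        "map_frame": "map",
        "odom_frame": "odom",
        "base_link_frame": "base_link",
        "world_frame": "odom",       # continuous, odom-frame estimate
        # Hypothetical topic names; replace with the topics your robot publishes.
        "odom0": "/odometry/wheels",
        "odom0_config": fusion_mask("vx", "vy", "vyaw"),
        "imu0": "/imu/data",
        "imu0_config": fusion_mask("yaw", "vyaw", "ax"),
    }


if __name__ == "__main__":
    # Print the parameters in the nested layout a ROS 2 parameter file uses.
    # The node name "ekf_filter_node" is an assumption.
    config = {"ekf_filter_node": {"ros__parameters": build_ekf_params()}}
    print(json.dumps(config, indent=2))
```

Naming the fused variables instead of typing fifteen `true`/`false` values by hand makes it harder to enable the wrong entry, which is a common source of configuration mistakes.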

Learning points: